System Overview

A real-time computer vision system that interprets sign language gestures through webcam input, converting them into text and audio output. Leveraging MediaPipe's hand tracking and custom gesture recognition algorithms, the system achieves 95% accuracy in controlled environments.

Technical Implementation

- MediaPipe Hands pipeline for 21-point hand landmark detection

- Custom angle-based gesture classification algorithm

- Flask backend with WebSocket for real-time video streaming

- History tracking with export functionality

Core Features

Real-time Processing

Processes webcam input at 30 FPS with MediaPipe hand tracking and recognizes gestures with under 50 ms latency.

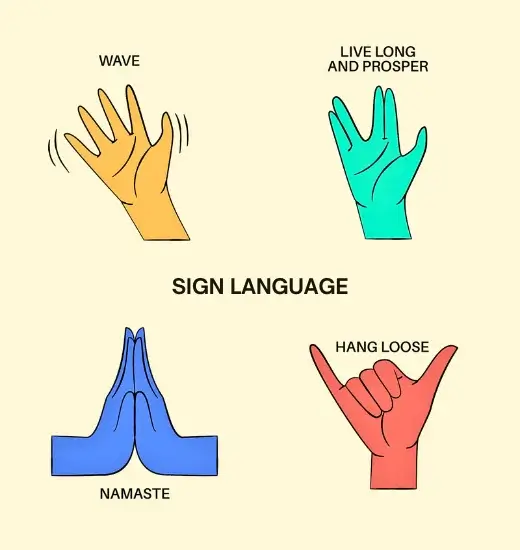

Gesture Library

Recognizes more than 15 essential ASL gestures, including letters, numbers, and common symbols such as "OK" and "Peace".

Session History

Maintains a timestamped detection log with export options (TXT/CSV) for review and analysis.
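A timestamped log with TXT and CSV export could be sketched roughly as follows. The `DetectionLog` class, its method names, and the column layout are hypothetical and chosen for illustration; the project's actual storage format is not described here.

```python
import csv
import io
from datetime import datetime, timezone


class DetectionLog:
    """Hypothetical in-memory log of (timestamp, gesture) detections."""

    def __init__(self):
        self.entries = []

    def record(self, gesture, when=None):
        # Default to the current UTC time when no timestamp is supplied.
        when = when or datetime.now(timezone.utc)
        self.entries.append((when.isoformat(timespec="seconds"), gesture))

    def to_csv(self):
        # One header row, then one row per detection.
        buf = io.StringIO()
        writer = csv.writer(buf, lineterminator="\n")
        writer.writerow(["timestamp", "gesture"])
        writer.writerows(self.entries)
        return buf.getvalue()

    def to_txt(self):
        # One human-readable line per detection.
        return "".join(f"[{ts}] {gesture}\n" for ts, gesture in self.entries)
```

Using the `csv` module rather than joining strings by hand keeps the export valid if a label ever contains a comma or a quote.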

Web Accessible

Responsive web interface that works in modern browsers across devices, deployed on AWS EC2 for global access.

Technical Deep Dive

Gesture Recognition Pipeline

- Hand landmark detection using MediaPipe

- Angle calculation between key joints

- Finger state classification (extended/folded)

- Geometric pattern matching

- Temporal smoothing of results
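The steps after landmark detection can be sketched in plain Python, assuming each landmark arrives as an `(x, y)` or `(x, y, z)` tuple indexed by MediaPipe's standard 21-point numbering. The 160° threshold, the gesture templates, and the names `joint_angle`, `finger_states`, `classify`, and `Smoother` are illustrative assumptions, not the project's actual values or code.

```python
import math
from collections import Counter, deque

# MediaPipe Hands landmark indices (base joint, middle joint, fingertip) per finger.
FINGER_JOINTS = {
    "thumb": (2, 3, 4),
    "index": (5, 6, 8),
    "middle": (9, 10, 12),
    "ring": (13, 14, 16),
    "pinky": (17, 18, 20),
}

EXTENDED_ANGLE_DEG = 160.0  # assumed threshold, not taken from the project

# Hypothetical templates: (thumb, index, middle, ring, pinky) extended flags -> label.
GESTURES = {
    (False, True, True, False, False): "Peace",
    (True, True, True, True, True): "Open palm",
    (False, False, False, False, False): "Fist",
}


def joint_angle(a, b, c):
    """Angle in degrees at point b between segments b->a and b->c."""
    v1 = [p - q for p, q in zip(a, b)]
    v2 = [p - q for p, q in zip(c, b)]
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        return 0.0
    cos = sum(x * y for x, y in zip(v1, v2)) / norm
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))


def finger_states(landmarks):
    """Classify each finger as extended (True) or folded (False)."""
    return tuple(
        joint_angle(landmarks[base], landmarks[mid], landmarks[tip]) >= EXTENDED_ANGLE_DEG
        for base, mid, tip in FINGER_JOINTS.values()
    )


def classify(landmarks):
    """Match the finger-state pattern against the templates; None if unknown."""
    return GESTURES.get(finger_states(landmarks))


class Smoother:
    """Majority vote over the last `window` per-frame labels."""

    def __init__(self, window=5):
        self.history = deque(maxlen=window)

    def update(self, label):
        self.history.append(label)
        return Counter(self.history).most_common(1)[0][0]
```

A nearly straight finger gives an angle close to 180° at its middle joint, while a curled finger gives a much smaller one, so a single threshold separates the two states. The majority vote then suppresses single-frame misclassifications at the cost of a few frames of delay.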

Performance Metrics

Challenges & Solutions

⚠️ Lighting Variations

Implemented adaptive brightness normalization and histogram equalization to handle varying lighting conditions.
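To show what histogram equalization does to a frame, here is a minimal pure-Python sketch of the global variant on a grayscale image stored as rows of integers. It is an illustration under that assumption and not the project's code; on real frames an optimized routine such as OpenCV's `cv2.equalizeHist` would normally be used, and the adaptive normalization step is not shown.

```python
def equalize_histogram(gray, levels=256):
    """Global histogram equalization for rows of ints in [0, levels - 1]."""
    # Count how often each intensity occurs.
    hist = [0] * levels
    for row in gray:
        for pixel in row:
            hist[pixel] += 1

    # Cumulative distribution of intensities.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)

    total = running
    cdf_min = next((c for c in cdf if c > 0), 0)
    if total == cdf_min:
        # Empty or single-intensity image: nothing to stretch.
        return [list(row) for row in gray]

    # Map each intensity so the cumulative distribution becomes roughly linear.
    scale = levels - 1
    lut = [round(max(c - cdf_min, 0) * scale / (total - cdf_min)) for c in cdf]
    return [[lut[pixel] for pixel in row] for row in gray]
```

A dim frame whose pixels cluster in a narrow band is spread across the full intensity range, which gives the later stages more contrast to work with.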

⚡ Real-time Performance

Optimized the MediaPipe configuration and implemented frame skipping to maintain 30 FPS on low-end devices.
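Frame skipping can be sketched as a small wrapper that runs the expensive step only on every Nth frame and reuses the last result in between. The `FrameSkipper` name and the fixed skip interval are assumptions for illustration; the project may instead choose the interval dynamically from measured frame time.

```python
class FrameSkipper:
    """Run `process` on every `every`-th frame and reuse the last result otherwise."""

    def __init__(self, process, every=2):
        if every < 1:
            raise ValueError("every must be at least 1")
        self.process = process
        self.every = every
        self.count = 0
        self.last = None

    def __call__(self, frame):
        # Frames 0, every, 2*every, ... are processed; the rest are skipped.
        if self.count % self.every == 0:
            self.last = self.process(frame)
        self.count += 1
        return self.last
```

The display loop can still draw every frame at full rate while the detector runs at a fraction of it, trading a little responsiveness for a steady frame rate.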